Custom Data Source

For most Dreamdata customers, Dreamdata's native data sources cover all their data integration needs. In some cases, however, data lives in other standard or custom-built systems. This article describes how to bring that data into Dreamdata as a custom data source and covers the use cases where this is most often needed.

This overview covers the following:

- Use Cases

- Upload a custom CRM Source

- Upload custom Stage Objects

- Upload custom Event and Web-tracking Data

- Implementation Process

Use Cases

Below are the three use cases, each requiring different file types and schemas. All fields in these schemas are required, but you may leave a field empty (null) if you don't have the data or if it doesn't apply to your context.

You might share multiple files or just one, depending on the use case.
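A minimal Python sketch of the "all fields present, nulls allowed" rule, assuming JSON Lines output: every record is rebuilt from a fixed field list, so a missing value becomes `null` rather than a missing key. The field names in `SCHEMA_FIELDS` are hypothetical placeholders; take the real list from the schema documentation for your use case.

```python
import json

# Hypothetical field list; replace with the fields from the relevant Dreamdata schema.
SCHEMA_FIELDS = ("account_id", "name", "domain")


def to_schema_record(raw: dict, fields: tuple = SCHEMA_FIELDS) -> dict:
    """Return a record with every schema field, using None (JSON null) for missing values."""
    return {field: raw.get(field) for field in fields}


def to_json_lines(records: list, fields: tuple = SCHEMA_FIELDS) -> str:
    """Serialize records as JSON Lines: one JSON object per line, in schema field order."""
    return "".join(json.dumps(to_schema_record(r, fields)) + "\n" for r in records)


if __name__ == "__main__":
    rows = [
        {"account_id": "a-1", "name": "Acme", "domain": "acme.com"},
        {"account_id": "a-2", "name": "Globex"},  # no domain known, so it is written as null
    ]
    print(to_json_lines(rows), end="")
```

This sketch also drops any keys that are not in the field list, which keeps every line aligned with the schema.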

Upload a custom CRM Source

Uploading a custom CRM Source is useful if you are in one of the following situations:

- You have a CRM that Dreamdata does not yet support

  - Dreamdata natively supports Salesforce, HubSpot, Pipedrive, and MS Dynamics

- You have a self-hosted CRM, such as Oracle, SAP, or MS Dynamics

- You want to avoid hitting CRM API limits

  - Consider first whether upgrading your CRM would be cheaper and better.

- You have sensitive data in your CRM that you can't share with Dreamdata for privacy or contractual reasons, for example, if you work with the public sector, healthcare, or other regulated industries.

  - CRMs often have a complex permission model that can prevent Dreamdata from accessing sensitive data via the native CRM integration. Here, you can read more about the Salesforce and HubSpot permission models.

Here, you can read more about the schema format when loading a custom CRM data source.

Upload custom Stage Objects

Uploading custom Stage Objects is typically needed when your ERP system holds information that is not available in your CRM, or when your measurements are defined as joins of multiple objects.

Here, you can read more about the schema format when loading custom Stage Objects.

Upload custom Event and Web-tracking Data

Uploading custom web-tracking data is typically used for data from tracking solutions that Dreamdata does not yet support out of the box, such as Snowplow, a home-built tracker, or another tracking solution.

Custom events typically come from a service you use that Dreamdata does not yet support, or from a service you have built yourself, such as your product.

Here, you can read more about the schema format when loading events and web-tracking data.

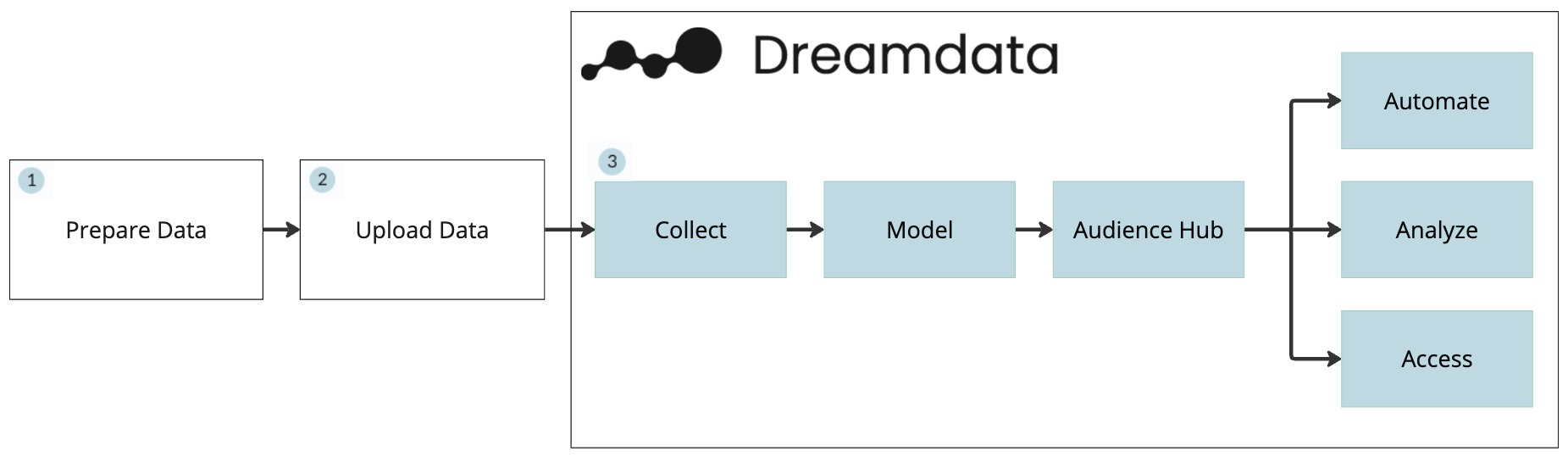

Implementation Process

- The customer prepares the data to upload.

  - Schedule a data export.

  - Transform the data into one of the supported file formats.

  - Compress the data using gzip.

- The customer uploads the data.

  - The data has to be uploaded to Google Cloud Storage or SFTP. Alternatively, Dreamdata can pull the data directly from your BigQuery (EU) instance.

  - Your Customer Success Manager gives you access to upload.

  - Here are code samples showing how to upload data to Google Cloud Storage.

  - For incremental uploads, structure the data in folders by data type and date, using the Hive partition folder structure below:

    - /accounts/dt=2024-01-23/file_01.json

    - /{data-type}/dt={date}/file_{number}.json

  - For full loads, overwrite the files at each upload.


- Dreamdata picks up the latest data according to your Data Model Schedule.

  - By updating the data before the Data Model Schedule runs, you ensure the data is as fresh as possible.

  - Dreamdata recommends uploading the data daily unless it changes infrequently.
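The folder conventions above could be sketched in Python as follows. The path helper reproduces the `/{data-type}/dt={date}/file_{number}.json` pattern; we assume the leading `/` refers to the root of your bucket, that the file number is zero-padded to two digits as in `file_01.json`, and that a full load simply reuses fixed object names so each upload overwrites the previous one. The upload function uses the `google-cloud-storage` client library and assumes the credentials and bucket come from the access set up with your Customer Success Manager; `my-dreamdata-bucket` is a hypothetical name.

```python
from datetime import date


def incremental_object_name(data_type: str, day: date, number: int, suffix: str = ".json") -> str:
    """Hive-partitioned object name: {data-type}/dt={YYYY-MM-DD}/file_{NN}{suffix}."""
    return f"{data_type}/dt={day.isoformat()}/file_{number:02d}{suffix}"


def full_load_object_name(data_type: str, number: int, suffix: str = ".json") -> str:
    """Hypothetical fixed object name per file, so each full load overwrites the previous upload."""
    return f"{data_type}/file_{number:02d}{suffix}"


def upload_file(bucket_name: str, local_path: str, object_name: str) -> None:
    """Upload one local file to Google Cloud Storage.

    Requires the google-cloud-storage package and valid credentials.
    """
    # Imported inside the function so the path helpers work without the package installed.
    from google.cloud import storage

    client = storage.Client()
    blob = client.bucket(bucket_name).blob(object_name)
    blob.upload_from_filename(local_path)  # replaces an existing object with the same name


if __name__ == "__main__":
    name = incremental_object_name("accounts", date(2024, 1, 23), 1)
    print(name)  # accounts/dt=2024-01-23/file_01.json
    # upload_file("my-dreamdata-bucket", "file_01.json", name)  # hypothetical bucket name
```

If you gzip the files, you could pass `suffix=".json.gz"`; whether that extension is expected is an assumption worth confirming with your Customer Success Manager.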

File format

Files can be delivered as JSON Lines (.jsonl), Newline Delimited JSON (.ndjson), Parquet (.parquet), or CSV (.csv).

We recommend compressing the files using gzip to reduce data transfer.
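As a sketch of the recommended format and compression, the Python below writes records as gzip-compressed JSON Lines using only the standard library. `to_jsonl_gz_bytes` builds the compressed payload in memory, which is convenient for small files and for checking output; `write_jsonl_gz` streams records straight to disk for larger exports. Both function names are illustrative and not part of any Dreamdata tooling.

```python
import gzip
import json
from typing import Iterable


def to_jsonl_gz_bytes(records: Iterable[dict]) -> bytes:
    """Serialize records as JSON Lines (one object per line) and gzip-compress the result."""
    text = "".join(json.dumps(record) + "\n" for record in records)
    return gzip.compress(text.encode("utf-8"))


def write_jsonl_gz(records: Iterable[dict], path: str) -> None:
    """Stream records to a gzip-compressed JSON Lines file without building it all in memory."""
    # "wt" opens the gzip stream in text mode, so str lines can be written directly.
    with gzip.open(path, "wt", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    write_jsonl_gz([{"account_id": "a-1", "domain": None}], "file_01.json.gz")
```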

Incremental or full uploads

You can upload the data incrementally or upload the full data set each time.

We recommend whichever is easiest for you.
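To illustrate the difference between the two approaches, here is a Python sketch that selects what to send on each run: every record for a full load, or only the records changed since the previous upload for an incremental one. It assumes each record carries a timezone-aware `updated_at` timestamp and that you store the time of your last successful upload yourself; both are hypothetical and depend on the system you export from.

```python
from datetime import datetime, timezone
from typing import Optional


def select_records(records: list, last_upload: Optional[datetime]) -> list:
    """Pick the records to upload on this run.

    last_upload=None means a full load: return every record (the upload step
    then overwrites the previous files). Otherwise return only records whose
    hypothetical 'updated_at' field is later than last_upload.
    """
    if last_upload is None:
        return list(records)
    return [r for r in records if r["updated_at"] > last_upload]


if __name__ == "__main__":
    rows = [
        {"id": 1, "updated_at": datetime(2024, 1, 22, 9, 0, tzinfo=timezone.utc)},
        {"id": 2, "updated_at": datetime(2024, 1, 23, 9, 0, tzinfo=timezone.utc)},
    ]
    since = datetime(2024, 1, 23, 0, 0, tzinfo=timezone.utc)
    print([r["id"] for r in select_records(rows, None)])   # [1, 2]
    print([r["id"] for r in select_records(rows, since)])  # [2]
```

Records are selected with a strict `>` comparison, so a record updated exactly at `last_upload` is not sent again; `>=` is the safer choice if your timestamps are coarse and you prefer occasional duplicates over missed changes.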